Episode Transcript

Transcripts are displayed as originally observed. Some content, including advertisements, may have changed.

0:00

It's a new ghost burger

0:02

from Carl's Jr. It's a

0:04

juicy, charbroiled Angus beef burger.

0:06

Eeee-fi- Melty Ghost Pepper Cheese.

0:08

Eeee-fi- Crispy Bacon Trippy Spicy

0:10

Soul-Scorching Sauce Burger. Peekity Spicy

0:12

Sauce! And that's the ghost

0:15

that haunts the recording booth. I also

0:17

like Carl's Jr. Burger. You've said that

0:19

before, Jeb. Save me one? Here. I

0:22

don't have any tea. Grab a ghost burger

0:24

for a limited time. Only at Carl's Jr.

0:26

Need burger? Get burger. Available for a limited

0:28

time at participating restaurants. So,

0:33

Emily Guskin. Because you're the

0:35

deputy polling director here at The Post,

0:39

I want to know what it's like

0:41

to be asked about a poll over

0:43

the phone. Like, let's say

0:45

that I have been chosen to answer

0:47

a poll that's being conducted by The

0:49

Washington Post. What does that look

0:51

like? Well, first your

0:53

phone's going to ring for this poll. Okay, let's

0:55

just do it for real. Okay, so, doo doo

0:58

doo, ring ring ring ring. So I pick up

1:00

the phone. Hello? Hello, I'm Emily. We're not selling

1:02

anything. Just doing an opinion poll on interesting subjects

1:04

in the news. I'm sorry, I'm a little busy

1:07

right now. How long is this going to take?

1:09

Very short. We'd love to have your opinion. May

1:11

I please speak with Martine? This

1:14

is she. Wonderful. Again, my

1:16

name is Emily. Before we continue, are you

1:18

driving or doing anything that requires your full

1:20

attention right now? Uh,

1:23

I mean, I'm like washing the dishes,

1:25

so I guess not. So, super, we

1:27

will go ahead then. That's

1:32

my co-host, Martine Powers, speaking with

1:35

Emily Guskin, our deputy polling director.

1:37

If you're like me or Martine, you

1:39

may be wondering about all these political

1:42

polls you've been hearing about right now.

1:44

So, we brought Emily into our

1:47

studio to help us make sense

1:49

of election polling this year, and

1:52

to also give us an idea of what it

1:54

actually feels like to take a poll over the

1:56

phone. Are you registered to vote in

1:58

the District of Columbia or not? this

8:00

really fundamental aspect of polling

8:02

called sampling. And sampling is

8:04

figuring out that sample of

8:06

people who we'll reach out

8:08

to, and those people respond to the

8:10

poll questions. And the

8:12

sample that we reach out to

8:15

should be representative of the total

8:17

universe that we're surveying. So if

8:19

it's, say, Virginia voters, the sample

8:21

that we're reaching out to, which

8:23

is not every single voter in

8:26

Virginia, it's just people that we

8:28

randomly chose to respond to the

8:30

survey needs to reflect everyone there.

8:32

So we want to ensure that

8:34

the population is reflective. An

8:39

analogy I like to use is making

8:41

soup. If you're making soup

8:43

for your friends who are coming over, you're

8:45

not going to taste the entire bowl before they

8:48

get there because then you've got nothing to serve

8:50

them. And you would be very, very full. And

8:52

you'd be a crappy hostess. If

8:55

you take a ladle and stir it up so

8:57

that you can mix it up and make sure

8:59

that the salt is all over and

9:01

that the beans are all over and not just

9:03

in one part totally, and then take a tiny

9:05

taste and be like, yeah, that's the ticket. And

9:07

you make sure in the spoon that you've got

9:09

a little bean and you got a little piece

9:12

of carrot and you make sure that it's got

9:14

the, you know, that it's a nice mix.
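
As a rough illustration of the sampling idea described here (a minimal sketch, not The Post's actual procedure): the simulated electorate, its 52 percent support level, and the helper name `poll_sample` are all assumptions invented for this example. It shows that a well-mixed random sample of 1,000 people tends to land close to the true share in a population of 100,000, much like one stirred spoonful of soup.

```python
import random


def poll_sample(population, sample_size, seed=0):
    """Draw a simple random sample and return the share of 1s in it."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    sample = rng.sample(population, sample_size)  # sampling without replacement
    return sum(sample) / sample_size


# Hypothetical electorate: 1 = supports candidate A, 0 = does not.
SUPPORTERS = 52_000
OTHERS = 48_000
population = [1] * SUPPORTERS + [0] * OTHERS
true_share = SUPPORTERS / len(population)

estimate = poll_sample(population, 1_000)
print(f"true share: {true_share:.3f}, sample estimate: {estimate:.3f}")
```

The sample only works as a stand-in for the whole population because every person has the same chance of being drawn, which is the "stir it up" step in the analogy.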

9:18

It's a little, little hot bath of

9:20

public opinion. And if it needs more salt,

9:22

you go back and you get more

9:24

salt in there. Okay.

9:26

So that helps me understand what a sample

9:29

is. But then getting back

9:31

to this idea of this poll that's

9:33

out in the field, once you

9:36

get the responses back from people, then

9:38

what happens? Then we get a

9:40

data set that comes back

9:42

to us and it just

9:44

looks like a list of numbers

9:47

and nonsense to most people. And

9:50

we are able to analyze that data

9:52

by looking at the different responses, but

9:54

also breaking them down by crosstab. So

9:56

at the end of all those issue

9:58

questions, we also ask people, you

12:00

look and it's just a two percentage

12:02

point difference, that's not huge. You

12:04

can be an educated consumer and look at what the

12:07

margin of error is in a poll and know

12:09

that it's a close race. Right

12:12

now, the presidential race is a pretty

12:14

close contest nationally and in several key

12:16

states, but in some states, it's not

12:18

as close. We did a poll in

12:21

Virginia and we found Harris leading Trump

12:23

by eight percentage points. And we can

12:25

comfortably say that she's leading there because

12:27

that eight percentage point difference was larger

12:29

than twice our error margin in that

12:31

poll. So

12:34

you're talking about how we should know

12:36

to trust a poll. And

12:38

I think one other kind of marker

12:40

of trust is the name

12:42

that's attached to that poll. We hear

12:44

these different organizations like Ipsos, Siena,

12:47

Pew, and I don't know really

12:49

what any of these places are

12:51

or what they do other than

12:53

I guess release a lot of

12:56

polls, but how should we be

12:58

thinking about the names that are attached to these

13:00

polls? There's a lot of polling

13:02

outlets out there and a lot of them are

13:04

good. I don't expect people to

13:06

really remember who to trust

13:08

and who not to, but I think there's a few

13:11

things that people can look for when they're reading polls.

13:13

If it's done by a big media organization,

13:15

even one that's not The Post, you can

13:18

be pretty confident in them trying to do a

13:20

really good job, right? New

13:22

York Times poll, Wall Street Journal poll, an Associated Press

13:25

poll, like these are all and

13:27

then TV networks too. And

13:30

you can also see how much information they've

13:32

shared about that poll. So if there is

13:34

a methodology statement that talks about margin of

13:37

error and sample size and things of that

13:39

nature, that can give you some more confidence.
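
To make the margin-of-error reasoning concrete, here is a minimal sketch under stated assumptions. It uses the textbook 95 percent formula for a simple random sample; the sample size of 1,000 is hypothetical (the transcript does not give the Virginia poll's sample size), and the function names `margin_of_error` and `lead_is_clear` are invented for illustration. Real polls usually report a somewhat larger margin because of weighting.

```python
import math


def margin_of_error(sample_size, share=0.5, z=1.96):
    """Approximate 95% margin of error, in percentage points,
    for a simple random sample (textbook formula, no design effect)."""
    return 100 * z * math.sqrt(share * (1 - share) / sample_size)


def lead_is_clear(lead_points, moe_points):
    """Rule of thumb from the conversation: a lead is comfortable
    when it is larger than twice the poll's error margin."""
    return lead_points > 2 * moe_points


moe = margin_of_error(1_000)  # hypothetical sample size
print(f"margin of error: +/-{moe:.1f} points")
print("8-point lead clear?", lead_is_clear(8, moe))
print("2-point lead clear?", lead_is_clear(2, moe))
```

With these assumed numbers the margin is roughly three points, so an eight-point lead clears the "twice the error margin" bar while a two-point lead does not.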

13:41

Also look at the question wording. A poll

13:43

should release the wordings of their questions. You

13:46

don't have to have a master's degree in

13:48

polling methods to understand if a question is

13:51

fairly written and if something is

13:53

leading or trying to get someone to respond in a

13:55

poll in a certain way. But

13:57

also it's really important to just look and

13:59

compare. There are outlets that share a bunch

14:02

of different polls in one space, and you

14:04

can look and see. Sometimes

14:06

the outlier actually ... Sometimes an

14:09

outlier is not an outlier, right? If a race

14:11

changes a lot, maybe the

14:13

race changed. But if there's been

14:15

like five polls in one state

14:17

and four of them show a

14:20

two-point difference or a one-point difference or

14:22

an even, and one shows a 10-point

14:24

lead, that 10-point lead might

14:26

just be an outlier, but it could also

14:28

show that the race is changing. So it's

14:31

good to keep watching, to see poll averages.
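
The five-poll scenario can be sketched in a few lines. This is only an illustration: the specific leads below are hypothetical numbers chosen to match the description (four close polls and one 10-point lead), the 5-point threshold in `far_from_rest` is an arbitrary assumption, and this is a plain unweighted average rather than any outlet's actual method.

```python
from statistics import mean, median

# Hypothetical leads, in percentage points, from five polls of one state.
leads = [2, 1, 0, 2, 10]


def far_from_rest(value, others, threshold=5):
    """Flag a poll whose lead differs from the median of the
    other polls by more than `threshold` points (arbitrary cutoff)."""
    return abs(value - median(others)) > threshold


for i, lead in enumerate(leads):
    others = leads[:i] + leads[i + 1:]
    note = "possible outlier" if far_from_rest(lead, others) else ""
    print(f"poll {i + 1}: {lead:+d} {note}")

average = mean(leads)
middle = median(leads)
print(f"average lead: {average:.1f}, median lead: {middle}")
```

A flag like this only says one poll disagrees with the others. It cannot tell whether that poll is noise or an early sign that the race is changing, which is why watching the average over time matters.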

14:33

The Washington Post has a poll average in

14:35

a bunch of swing states

14:37

and also nationally, and you can compare just

14:39

to that average to see what you think

14:41

and to see what others are measuring. After

14:49

the break, Martine and Emily talk

14:51

about the public's trust issues with

14:53

polls and what pollsters

14:55

have done to improve since 2016. We'll

14:59

be right back.

16:13

I

16:16

want to acknowledge the elephant in the room

16:18

here, because I think when

16:20

you ask a lot of people about polling,

16:22

whether polling can be trusted, they

16:24

will often bring up instances

16:27

or situations or elections where they felt like

16:29

the polls weren't accurate. And one that comes

16:31

to mind, obviously, for many people is 2016,

16:33

where there is this

16:35

widespread belief or feeling that

16:38

people didn't see Trump's

16:41

win coming, or that the polling didn't

16:43

reflect that, and that that's a reason

16:45

to not trust the polls

16:47

going forward. What would you say to someone who thought

16:49

that? In

16:52

2016 and 2020, polling was kind of far

16:55

off. We're a self-critical group of folks, pollsters,

16:57

and we meet every year at a conference

16:59

and basically analyze what has happened in previous

17:01

years and try to improve on what we've

17:03

done before. And we really want to get

17:06

it right. Political horse-race polling is

17:08

particularly hard, because we need to figure

17:10

out exactly who is turning out to

17:12

vote in an election. And we don't

17:14

know until after election day who voted.

17:17

In 2016, a lot of polls showed Hillary

17:20

Clinton ahead of Trump, but there

17:22

were some that were a lot closer, too, and

17:24

it was a very close election. One

17:27

way we've tried to improve since then

17:29

is weighting by education. So

17:31

we talk about ensuring that

17:33

the population is reflective. That

17:35

election in particular, and subsequent

17:38

elections, it's seen that

17:40

people with higher educations tend to vote

17:42

Democratic, and that wasn't necessarily the case

17:44

with white people in previous elections. So

17:47

making sure we have more balance and

17:49

are able to do that. We also

17:51

look at non-response issues more, trying

17:54

to ensure that there's no

17:57

group of people that's systematically

17:59

not responding to surveys, so it's important

18:01

to look at that because this

18:03

country chooses its president not

18:05

based on popular vote nationally

18:08

but on the electoral map,

18:10

which is very confusing and

18:12

means that we have to do a lot

18:14

more work to see what's happening. That

18:17

I think was part of the reason that 2016 was

18:19

surprising to so many people. Hillary Clinton was

18:21

ahead in the popular vote in so many

18:23

polls leading up to the election, that

18:26

we don't choose our president in the

18:28

US based on popular vote, but there

18:30

was a lot more polling

18:32

being done at the national level. It

18:35

was a really close election and we've

18:37

tried to improve on those results. In

18:39

2018, polls were better, in 2020 they

18:42

were worse, and our goal

18:44

is to improve and to get that right. And

18:47

it seems to me at least that by

18:49

and large, like in recent elections, polls

18:51

really have gotten it right. In

18:54

2018, 2022, the midterms, you know

18:56

that there was a real matchup between polling

18:58

and what actually transpired in the election. We

19:00

like to get it right. What

19:03

are some of the common mistakes that you see

19:05

in people interpreting polls incorrectly? I

19:08

think it's good to have a skeptical hat on

19:10

when you're seeing something from an advocacy

19:13

group or someone who has skin in the game. They

19:15

can ask questions in certain ways to get

19:17

the answers that they want. News

19:20

pollsters don't want to do that.

19:23

Candidates and political partisans often will

19:26

release a poll if it looks

19:28

good for their candidate, but

19:30

not if it looks bad for

19:32

their candidate and they'll be choosy about that. You

19:35

might say, oh, this congressional candidate

19:37

is up 10 points according to them, but they

19:39

might have had 15 polls before

19:41

then where it was neck and neck and they

19:43

just kept that to themselves because they're not a

19:45

public pollster and they don't really have to release

19:48

that. What you're saying is trying to be

19:50

aware of the folks who are

19:52

putting out this poll. Whether they have skin in the

19:54

game, whether they're trying to advocate for something or

19:56

are on one side or the other and

19:58

that they have an

20:01

incentive to create a skewed picture. Absolutely, and

20:03

I get a lot of that in my

20:05

inbox. Those people are sending

20:07

journalists a lot of stuff all the time.

20:09

I can imagine. One more

20:11

thing that has come up relatively recently

20:13

that I've been wondering about is

20:15

this term prediction models, which

20:18

to me sounds like a different way of saying poll,

20:20

right? That like, this is

20:22

the thing that's going to tell you who's going to win and who's going

20:24

to lose. What is a prediction

20:26

model? And how

20:28

should we be thinking about those? Prediction

20:31

models take polls and put them in

20:33

a computer. This sounds like

20:35

explaining something to my dad, but just put

20:38

into a computer, the machine will take care

20:40

of it. Beeps and boops and do the

20:43

math. No, my dad

20:45

is very good at understanding these things. He

20:47

does a good job of explaining stuff to

20:49

the other people in their 55-plus community about

20:52

polling. It's like he's doing God's work. Prediction

20:55

models and polls are different. Prediction

20:58

models use polls along with

21:00

other indicators that they figure

21:03

out and put in to

21:05

look at what might happen in the future.

21:08

Polls are snapshots of what is happening

21:10

right now. We're focused on today with

21:12

a poll. Prediction models,

21:15

you have to believe in the indicators

21:17

that they're expecting to have an impact

21:19

on the future. So at

21:21

The Post, we don't have a prediction

21:23

model, but we average polls, which is

21:25

a bit different. So we're not looking

21:27

into the future. We're trying to use what

21:30

we have to give us the best picture of

21:32

what is happening today. So we

21:34

include both state and national polls, and

21:36

we weight them using information about who

21:38

is putting that poll out to give

21:40

us the best estimation of the true

21:43

state of that race right as it

21:45

is right now. So

21:48

you've talked about how polls

21:50

work, why

21:52

polls can be trusted when they come

21:54

from reputable sources and when they have

21:56

large enough sample sizes, and how polls

21:59

can be really

22:01

insightful. But I'm

22:03

curious more from a self-care

22:06

standpoint, how much

22:08

do you think people should be spending

22:10

their day-to-day following the ups and downs

22:12

of this poll and that poll and

22:15

whether or not a

22:17

half-point percentage change in one direction or

22:19

the other, how much do you

22:21

think that is informing the

22:25

wider public in a useful way versus

22:27

maybe it's too much, maybe you shouldn't

22:30

be living and dying by whether or not

22:32

your preferred candidate happens to have

22:34

gotten a slightly more positive poll in the last 24

22:37

hours? So I've been

22:39

working in polling since 2008, that

22:42

was my first presidential election, working

22:44

in polling, and

22:47

let me tell you that self-care

22:49

is really important. And

22:52

living and dying by your candidate's

22:54

polls can be exhausting and probably

22:57

not healthy, but please keep reading

22:59

our stuff. I

23:01

think it's good to be aware of

23:03

it, but be aware of it in

23:06

context. And still polling is the best

23:08

way to measure what a population thinks.

23:11

And I think it's important to have

23:13

compassion for voters. We have

23:15

undecided voters, that's a fact. That

23:18

can be- And I think some people find that

23:20

very frustrating. I think people find that really hard

23:23

to believe. Like at this point, how can you

23:25

not be sure whether or not you want to

23:27

vote for Donald Trump? Either you do or you

23:29

don't, and what more information do you need? People

23:32

are complicated and some of them are low information,

23:34

but a lot of them aren't. And they have,

23:36

if you voted Republican for your entire life, it

23:39

might be hard for you to consider voting for

23:42

a Democrat now, and vice versa. The

23:44

people who respond to our surveys and the

23:47

Americans overall, I'm so thankful to them. Because

23:49

a lot of us are in our bubbles,

23:51

and a lot of us only talk to

23:53

people who we see eye to eye on

23:56

or have similar life experiences to us. And

23:59

a poll can open up our eyes

24:01

to how other people think and other

24:03

people feel and how they live their

24:05

lives. And there's

24:07

a lot of people out there who

24:09

aren't like us and they deserve to

24:11

have their voices heard just as much

24:14

and maybe even more so if they're more interesting

24:16

and they're a bit unsure about who

24:19

they're going to vote for and why

24:21

they're unsure about who they're going to

24:23

vote for. I think it's a great

24:25

responsibility for us to measure people's opinions

24:28

because no one else is really doing

24:30

that than pollsters and we see

24:32

a lot of news articles that quote experts

24:34

but I think the American people really are

24:36

the experts and they're the people that we

24:39

do need to hear from and understand how

24:41

they feel and think and why they're doing

24:43

what they're doing. Emily,

24:47

I never thought that you would be able to

24:50

make me feel emotional about polling. Thank

24:52

you so much for sharing that. Thank you so

24:54

much. Emily

24:57

Guskin is the deputy polling director for

24:59

The Post. She spoke to

25:01

my co-host, Martine Powers. Before

25:15

I leave you, I wanted to share some news

25:17

that I've been following today. We're

25:20

still learning about how devastating Hurricane

25:22

Helene has been. The

25:24

death toll is now over 150 and

25:27

that number is expected to rise. My

25:30

colleague Brianna Sacks is on the ground right

25:32

now in southern Georgia in a

25:35

town called Valdosta. This

25:37

area was still recovering from a

25:39

hurricane last year when Helene

25:42

hit. Brianna was inside

25:44

of a fast food restaurant

25:46

when she met Jesse Johnson-Chiroux.

25:49

Like her neighbors, Johnson-Chiroux has

25:51

been dealing with trying to feed

25:53

her family and she hasn't

25:55

had power for a week. The

28:00

kind of immunity is reserved

28:02

for official presidential acts. This

28:04

filing is intended to show Trump can

28:06

still face trial by arguing

28:09

he was acting as a political

28:11

candidate, not as the president. And

28:14

there could be a political fallout

28:16

from this filing. My colleague, politics

28:18

reporter Aaron Blake will unpack that

28:20

and more tomorrow in his podcast

28:22

The Campaign Moment. Usually

28:24

you can find The Campaign Moment on

28:26

Fridays in our Post Reports feed, but we'll

28:28

have a different episode here tomorrow. So

28:31

head to The Campaign Moment podcast feed

28:34

and subscribe so you'll be sure to catch it. That's

28:39

it for Post Reports. Thanks for listening.

28:42

Today's show was produced by Ariel Plotnick

28:44

with help from Bishop Sand. It

28:47

was mixed by Sam Bair and edited

28:49

by Reena Flores. Thanks

28:51

also to Scott Clement and Brianna Sacks.

28:54

I'm Elahe Izadi. We'll be

28:57

back tomorrow with more stories from The Washington Post.

29:26

Bye bye.

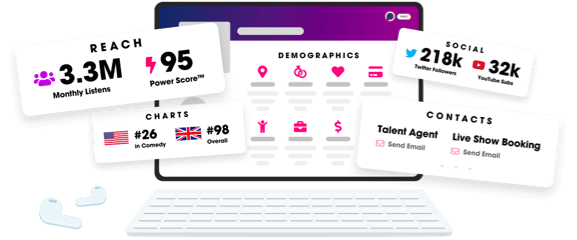

Unlock more with Podchaser Pro

- Audience Insights

- Contact Information

- Demographics

- Charts

- Sponsor History

- and More!

- Account

- Register

- Log In

- Find Friends

- Resources

- Help Center

- Blog

- API

Podchaser is the ultimate destination for podcast data, search, and discovery. Learn More

- © 2024 Podchaser, Inc.

- Privacy Policy

- Terms of Service

- Contact Us